The ever-growing capacity of computers and their falling cost have facilitated the development of remarkably precise predictive systems, applicable in many fields, from financial markets to medicine. But this greater capacity comes with more complex predictive models, which makes it harder to explain the results obtained or why a certain conclusion has been reached. Some models are easy to explain, but they are simpler and less precise. In each case we must decide on the trade-off between precision and explainability.

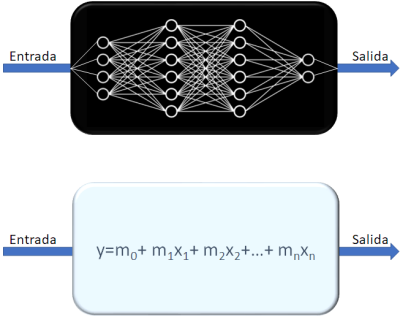

Black-box systems, like deep-learning systems (neural networks), can have thousands or even millions of internal parameters, and they perform as many operations in each prediction, which makes it practically impossible to interpret why the system reaches one conclusion or another. White-box or transparent systems, on the other hand, are relatively easy to explain: it is possible to follow the reasoning path of the model, or to compute the weight of each variable in the final decision. Every machine learning algorithm in use falls somewhere between these extremes, from the practically unexplainable, like neural networks, to the fully transparent, like linear regression. The choice is ours.
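To make "the weight of each variable in the final decision" concrete, here is a minimal sketch of how a linear model can be read directly. The coefficients, variable names and customer values are invented for illustration; they do not come from any real model.

```python
# Hypothetical coefficients of a linear risk-scoring model (illustrative values only).
INTERCEPT = 0.5
COEFFICIENTS = {"income_k": -0.25, "debt_ratio": 2.0, "late_payments": 0.5}


def explain_linear(features):
    """Return the prediction and the contribution of each variable.

    In a linear model the prediction is the intercept plus a sum of
    coefficient * value terms, so each term is that variable's contribution.
    """
    contributions = {
        name: COEFFICIENTS[name] * value for name, value in features.items()
    }
    prediction = INTERCEPT + sum(contributions.values())
    return prediction, contributions


# A made-up customer, used only to show the calculation.
customer = {"income_k": 4.0, "debt_ratio": 0.5, "late_payments": 3.0}
prediction, contributions = explain_linear(customer)

# List the variables from most to least influential for this customer.
for name, value in sorted(
    contributions.items(), key=lambda item: abs(item[1]), reverse=True
):
    print(f"{name}: {value:+.2f}")
print(f"prediction: {prediction:.2f}")
```

Every number in the output can be traced back to one coefficient and one input value, which is exactly what a model with millions of interacting parameters does not allow.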

If we develop a complex system to estimate customer churn risk, for instance, we will know with high precision which customers are at risk, but we will not know why, that is, what the source of their dissatisfaction is. In that case it is better for the sales team to investigate the reason and decide on a course of action than to trigger an automatic price cut, because the system does not know, for example, that customers are complaining about bad deliveries. The simpler the model, the easier it is to explain its decisions, but the less precise it tends to be. If we use a simple regression to estimate the credit risk of a customer, we will understand perfectly how each variable contributes, but the estimate might be so imprecise that we might as well make our decision by rolling dice.

There is strong interest in developing explainable AI, and big players like Google and IBM already have products yielding interesting results. If we are on a tight budget, there are simple, creative solutions that yield equally simple explanations. It is our particular need and our context that dictate where we stand between precision and explainability.
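As one possible example of a cheap, simple explanation (an illustration chosen here, not a description of any vendor's product), we can probe an opaque model by changing one input at a time and watching how the prediction moves. The `black_box_churn_risk` function below is a made-up stand-in for a model we can only call, and its variables and numbers are hypothetical.

```python
def black_box_churn_risk(customer):
    """Hypothetical stand-in for an opaque model: we can call it, not inspect it."""
    score = (
        0.125
        + 0.125 * customer["late_deliveries"] * customer["complaints"]
        - 0.0625 * customer["years_as_customer"]
    )
    return max(0.0, min(1.0, score))  # keep the risk between 0 and 1


def one_at_a_time_effects(model, instance, step=1):
    """Increase each input by `step`, one at a time, and record the change in output."""
    baseline = model(instance)
    effects = {}
    for name in instance:
        nudged = dict(instance)  # copy so the original instance is untouched
        nudged[name] += step
        effects[name] = model(nudged) - baseline
    return baseline, effects


# A made-up customer, used only to show the probing procedure.
customer = {"late_deliveries": 2, "complaints": 1, "years_as_customer": 2}
baseline, effects = one_at_a_time_effects(black_box_churn_risk, customer)

print(f"baseline risk: {baseline:.3f}")
for name, change in effects.items():
    print(f"{name} +1 changes the risk by {change:+.3f}")
```

This kind of probing only describes the model's behavior around one customer and ignores how inputs move together, so it is a rough hint for a human analyst rather than a full explanation.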

Image rights:

Image by fullvector on Freepik

Image by Kaziislam691 on Wikimedia Commons