We continue our series of plain-language articles by explaining artificial neural networks, perhaps the most “mystical” form of machine learning and the foundation of what is known as deep learning.

Artificial neural networks are new to most people, but research on artificial models of the brain began at about the same time as the first computers. The photo-perceptron, which can be considered the first neuron for an artificial vision system, was introduced in 1958. The multilayer perceptron, still considered the basic neural network, followed and overcame the limitations of single neurons. Modern neural network training techniques began to be developed in the 1980s, although their mathematical foundations date back centuries and their first computer applications date back to the 1970s.

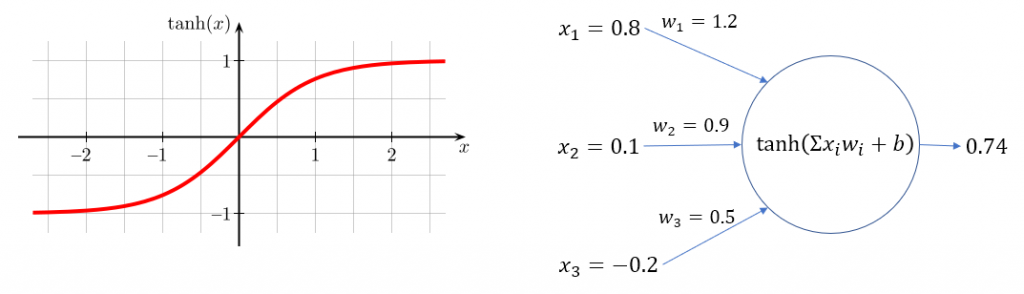

A simple neuron is like a function that receives stimuli (x) from outside or from other neurons through connections, each with a certain strength (w), and returns the result of applying the function to the combination of stimuli and weights, typically their weighted sum. The original perceptron used a simpler function than the hyperbolic tangent shown in the image, but a very similar one nonetheless.
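To make the idea concrete, here is a minimal sketch of such a neuron in Python. It assumes the "combination" is a weighted sum passed through the hyperbolic tangent; the `bias` term and the sample numbers are illustrative assumptions, not taken from the image.

```python
import math

def neuron(stimuli, weights, bias=0.0):
    """Return tanh of the weighted sum of the stimuli.

    `bias` is an optional constant offset (an assumption for illustration).
    """
    combined = sum(x * w for x, w in zip(stimuli, weights)) + bias
    return math.tanh(combined)

# Three stimuli (x) with illustrative connection strengths (w).
output = neuron([1.0, 0.5, -1.0], [0.8, -0.4, 0.2])
print(output)  # always a value between -1 and 1
```

Whatever the stimuli and weights, the hyperbolic tangent squeezes the result into the range from -1 to 1.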

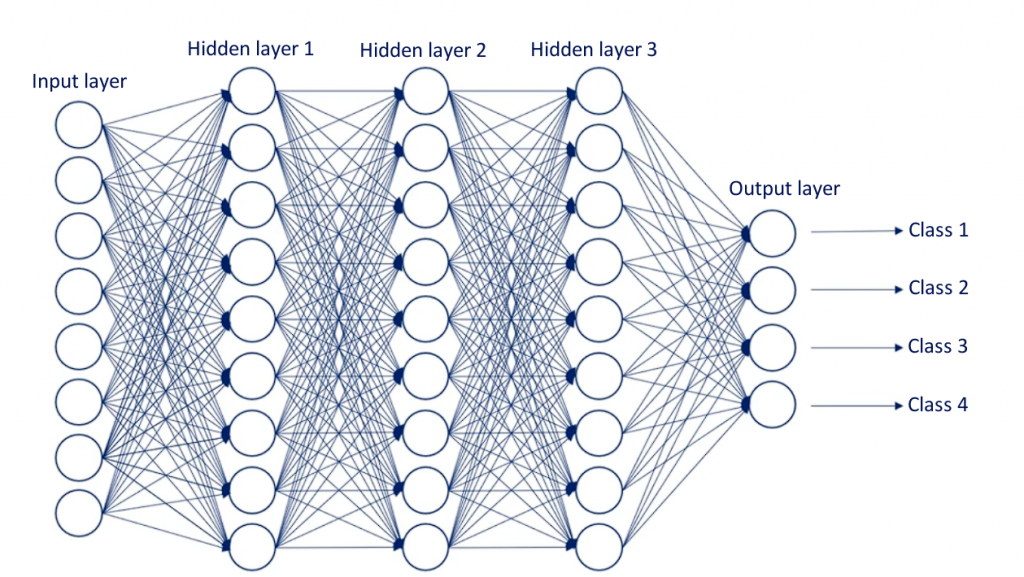

A multilayer perceptron is a structure of neurons arranged in layers, such that the value returned by each neuron becomes a stimulus fed to the neurons of the next layer, each connection with its own weight (the strength of the neuronal connection). Between the input and output layers there can be several hidden layers. We can picture the network as a large Excel spreadsheet in which certain cells are neurons whose content is a formula that combines the values of other “neuron cells” with “weight cells” (one weight per connection), while the “weight cells” hold only numeric values, no formulas.
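The layered structure can be sketched in a few lines of Python. The layer sizes below (8 inputs, hidden layers of 16 and 8 neurons, 4 outputs) are an assumption chosen only so that the parameter count lands above 300; the network in the image may be organized differently. The weights play the role of the numeric-only cells, and `forward` plays the role of the formulas.

```python
import math
import random

def make_layer(n_inputs, n_neurons, rng):
    """One layer: a row of weights per neuron, plus one bias per neuron."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases

def forward(network, inputs):
    """Feed the inputs through every layer; each layer's outputs
    become the stimuli of the next layer."""
    values = inputs
    for weights, biases in network:
        values = [math.tanh(sum(x * w for x, w in zip(values, row)) + b)
                  for row, b in zip(weights, biases)]
    return values

rng = random.Random(0)   # fixed seed so the sketch is repeatable
sizes = [8, 16, 8, 4]    # hypothetical layer sizes (see lead-in)
network = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

# Count every adjustable number: all weights plus all biases.
n_parameters = sum(len(w) * len(w[0]) + len(b) for w, b in network)
outputs = forward(network, [0.5] * 8)
print(n_parameters, outputs)
```

With these assumed sizes the count is 316 adjustable numbers, and the untrained network already returns 4 output values, although they are meaningless until the weights are trained.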

We train the network by adjusting the weights of the connections (the Excel cells that hold only numeric values) until we obtain the desired output for each set of input values. This simple network has over 300 parameters or weights to adjust, but don’t panic: there are algorithms that train the network and take care of the adjustments for us, tweaking the weights in the direction that reduces the error (the difference between the output we get and the correct answer). The error will be higher in the early training cycles, but it will gradually decrease until, ideally, we achieve an almost perfect classification.
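The following is a minimal sketch of that idea, not the full algorithm used for multilayer networks: it trains a single tanh neuron with plain gradient descent on a made-up toy data set. The data, the learning rate, and the number of cycles are all assumptions chosen for illustration.

```python
import math

def predict(weights, bias, x):
    """Output of a single tanh neuron."""
    return math.tanh(sum(xi * wi for xi, wi in zip(x, weights)) + bias)

def train(samples, cycles=200, learning_rate=0.5):
    """Nudge the weights, sample by sample, in the direction that
    reduces the squared error. Returns weights, bias and the mean
    squared error recorded after each training cycle."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    errors = []
    for _ in range(cycles):
        for x, target in samples:
            out = predict(weights, bias, x)
            # Slope of the squared error with respect to the weighted
            # sum (constant factor folded into the learning rate).
            delta = (out - target) * (1.0 - out * out)
            for i, xi in enumerate(x):
                weights[i] -= learning_rate * delta * xi
            bias -= learning_rate * delta
        mse = sum((predict(weights, bias, x) - t) ** 2
                  for x, t in samples) / len(samples)
        errors.append(mse)
    return weights, bias, errors

# Toy data (hypothetical): answer +1 if either input is on, else -1.
samples = [([0.0, 0.0], -1.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
weights, bias, errors = train(samples)
print("error after first cycle:", errors[0])
print("error after last cycle: ", errors[-1])
```

Comparing the first and last recorded errors shows the pattern described above: a high error early on that shrinks as the cycles repeat.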

Such a network could be trained, for instance, to qualify commercial leads, for which we will need data saved from previous campaigns. We need input data, such as the channel that generated the lead, the size of the customer, the expected time to purchase, … up to the 8 network input parameters; and we need output data, like a tag indicating whether that lead became a loyal customer, an eventual customer, a one-time customer, or never bought anything, that is, our 4 output classes. Once the network has been trained, we just have to feed it the data of a new lead and it will tell us whether we should pursue the lead relentlessly or drop it and save our efforts. Interesting, isn’t it? As in all machine learning, we depend on having enough quality data to train the network, and we won’t know exactly why one lead is better than another, but we will certainly know how good it is.
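As a rough sketch of the last step, the 4 output values of a trained network could be turned into a label like this. Converting the outputs to probabilities with a softmax is a common choice but an assumption here, and the four scores are invented for the example rather than produced by a real network.

```python
import math

# The 4 output classes described in the text, in a hypothetical order.
CLASSES = ["loyal customer", "eventual customer",
           "one-time customer", "never bought"]

def softmax(scores):
    """Turn raw output values into probabilities that add up to 1."""
    peak = max(scores)  # subtracting the peak avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def qualify(scores):
    """Return the most likely class and its probability."""
    probabilities = softmax(scores)
    best = max(range(len(CLASSES)), key=lambda i: probabilities[i])
    return CLASSES[best], probabilities[best]

# Invented output values for one new lead.
label, confidence = qualify([2.0, 0.5, -1.0, 0.1])
print(label, round(confidence, 2))
```

The probability attached to the label is the "how good it is" part: it says how strongly the network favors that class, without explaining why.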

This is how basic artificial neural networks work, but there are much more sophisticated architectures, which we may discuss in another article. There are networks that can directly process and recognize unstructured data, such as images or natural language; that can generate artificial images of faces or landscapes; that can animate the faces of people who have passed away, or replicate the face of a living person. But deep, deep inside (deep learning pun intended), they all learn by adjusting connection weights, little by little, repeatedly, seeking to reduce the distance between the answer they give during training and the correct response. After all, repetition is still the basis of learning.

At Melioth Digital Services we can help you by designing and developing ANNs to solve your real business problems, not science fiction.

Image by GarryKillian on Freepik